Jersey Jazzman: NJ's Student Growth Measures (SGPs): Still Biased, Still Used Inappropriately

What follows is yet another year's worth of data analysis on New Jersey's student "growth" measure: Student Growth Percentiles (SGPs).

And yet another year of my growing frustration and annoyance with the policymakers and pundits who insist on using these measures inappropriately, despite all the evidence.

Because it's not like we haven't looked at this evidence before. Bruce Baker started back in 2013, when SGPs were first being used in making high-stakes decisions about schools and in teacher evaluations; he followed up with a more formal report later that year.

Gerald Goldin, a professor emeritus at Rutgers, expressed his concerns back in 2016. I wrote about the problems with SGPs in 2018, including an open letter to members of the NJ Legislature.

But here we are in 2019, and SGPs continue to be employed in assessments of school quality and in teacher evaluations. Yes, the weight of SGPs in a teacher's overall evaluation has been cut back significantly, down to 5 percent -- but it's still part of the overall score. And SGPs are still a big part of the NJDOE's School Performance Reports.

So SGPs still matter, even though they have a clear and substantial flaw -- one acknowledged by their creator himself -- that renders them invalid for use in evaluating schools and teachers. As I wrote last year:

It's a well-known statistical precept that variables measured with error tend to bias positive estimates in a regression model downward, thanks to something called attenuation bias. Plain English translation: Because test scores are prone to error, the SGPs of higher-scoring students tend to be higher, and the SGPs of lower-scoring students tend to be lower.

Again: I'm not saying this; Betebenner -- the guy who invented SGPs -- and his coauthors are:

It follows that the SGPs derived from linear QR will also be biased, and the bias is positively correlated with students’ prior achievement, which raises serious fairness concerns....

The positive correlation between SGP error and latent prior score means that students with higher X [prior score] tend to have an overestimated SGP, while those with lower X [prior score] tend to have an underestimated SGP. (Shang et al., 2015)

Here's an animation I found on Twitter this year* that illustrates the issue:

Test scores are always -- always -- measured with error. Which means that if you try to show the relationship between last year's scores and this year's -- and that's what SGPs do -- you're going to have a problem, because last year's scores weren't the "real" scores: they were measured with error. This means the ability of last year's scores to predict this year's scores is underestimated. That's why the regression line in this animation flattens out: the more error is added to last year's scores, the more the estimated correlation between last year and this year falls below what it actually is.**
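For readers who want to see the flattening for themselves, here is a minimal simulation sketch. It is not the SGP model (SGPs use quantile regression) and it uses no NJDOE data; everything in it, including the names `ols_slope` and `simulate_slopes`, the standardized score scale, and the noise levels, is an assumption made up for illustration. It generates a "true" prior score, builds this year's score from it with a true slope of 1.0, and then regresses this year's score on increasingly noisy versions of the prior score.

```python
import random


def ols_slope(x, y):
    """Ordinary least squares slope of y regressed on x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    return cov_xy / var_x


def simulate_slopes(noise_sds, n_students=20000, seed=0):
    """Return {noise SD: estimated slope} for simulated students."""
    rng = random.Random(seed)
    # Hypothetical "true" prior achievement on a standardized scale (assumption).
    true_prior = [rng.gauss(0, 1) for _ in range(n_students)]
    # This year's score: true slope of 1.0 on true prior achievement, plus noise.
    current = [t + rng.gauss(0, 0.5) for t in true_prior]
    slopes = {}
    for sd in noise_sds:
        # What we actually observe: last year's score measured with error.
        observed_prior = [t + rng.gauss(0, sd) for t in true_prior]
        slopes[sd] = ols_slope(observed_prior, current)
    return slopes


slopes = simulate_slopes([0.0, 0.5, 1.0])
for sd, slope in slopes.items():
    print(f"prior-score error SD {sd}: estimated slope {slope:.2f}")
```

With a true-score variance of 1, the classical attenuation factor is 1 / (1 + sd²), so the estimated slope should land near 1.0, 0.8, and 0.5 as the error grows, even though the real relationship never changed.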

Again: The guy who invented SGPs is saying they are biased, not just me. He goes on to propose a way to reduce that bias which is highly complex and, by his own admission, never fully addresses all the biases inherent in SGPs. But we have no idea if NJDOE is using this method.

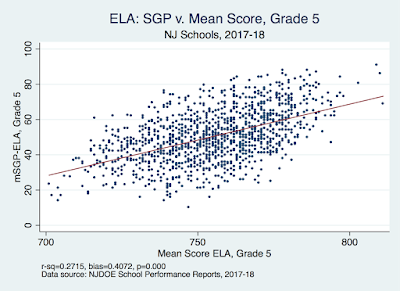

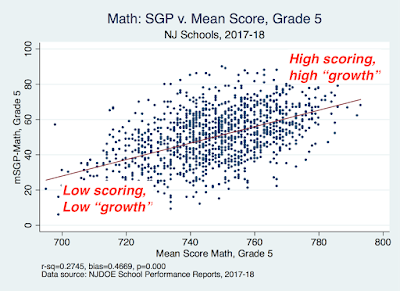

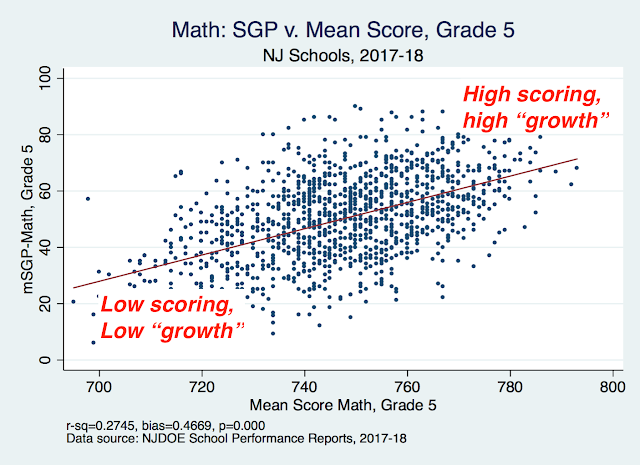

What I can do instead, once again, is use NJDOE's latest data to show these measures remain biased: Higher-scoring schools are more likely to show high "growth," and lower-scoring schools "low" growth, simply because of the problem of test scores being measured with error.

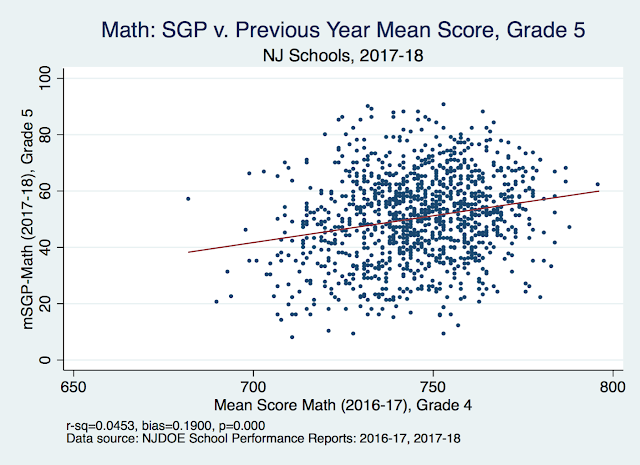

Here's an example:

There is a clear correlation between a school's average score on the Grade 5 math test and its Grade 5 math mSGP***. The bias is such that if a school has an average (mean) test score 10 points higher than another, it will have, on average, an SGP 4.7 points higher as well.
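To make the arithmetic behind that statement concrete, here is a small sketch of a school-level linear fit. The four "schools" are invented values chosen to lie exactly on a line with a slope of 0.47, so they are not NJDOE data, and `fit_line` is a hypothetical helper, not the code behind the scatterplots. The point is only to show how a slope of 0.47 mSGP points per scale-score point turns into "10 points higher means 4.7 points higher."

```python
def fit_line(x, y):
    """Least-squares slope and intercept of y regressed on x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    slope = (
        sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
        / sum((a - mean_x) ** 2 for a in x)
    )
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Invented illustration values, NOT NJDOE data: school mean scale scores
# and school mSGPs constructed to sit on a line with slope 0.47.
mean_scores = [730.0, 740.0, 750.0, 760.0]
msgps = [40.0, 44.7, 49.4, 54.1]

slope, intercept = fit_line(mean_scores, msgps)
gap = slope * 10  # predicted mSGP difference for a 10-point score difference

print(f"slope: {slope:.2f} mSGP points per scale-score point")
print(f"predicted mSGP gap for a 10-point score gap: {gap:.1f}")
```

Real school data scatter around the fitted line, of course; the slope describes the average tendency, not any one school.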

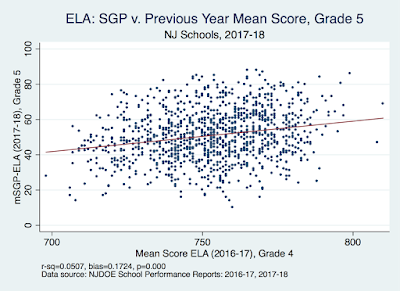

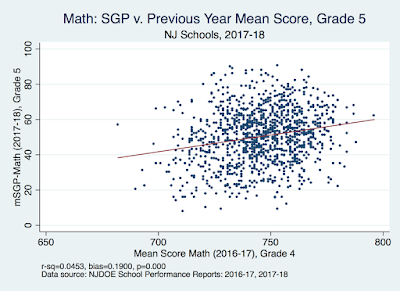

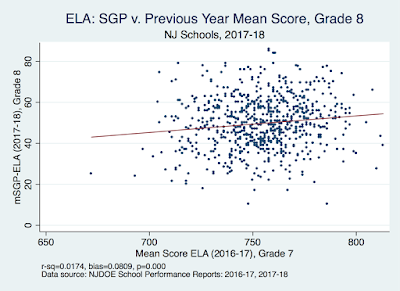

What happens if we compare this year's SGP to last year's score?

The bias is smaller but still statistically significant and still practically substantial: a 10-point jump in test scores yields a 2-point jump in mSGPs.

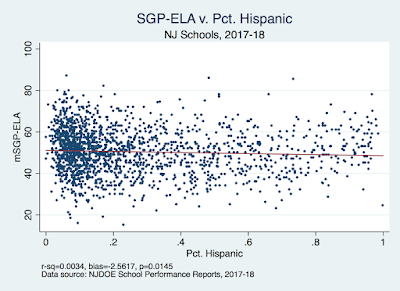

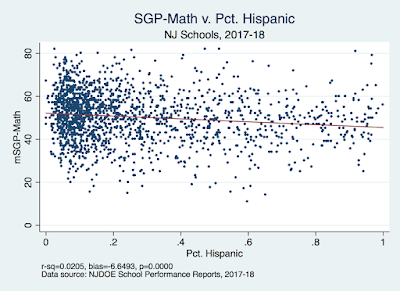

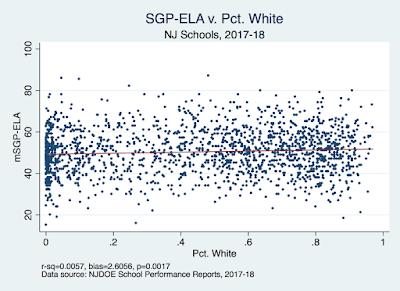

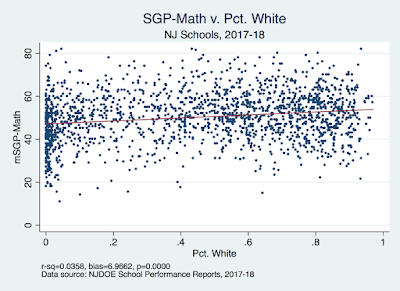

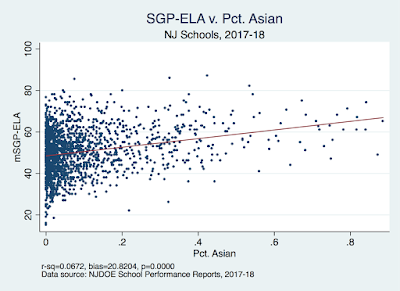

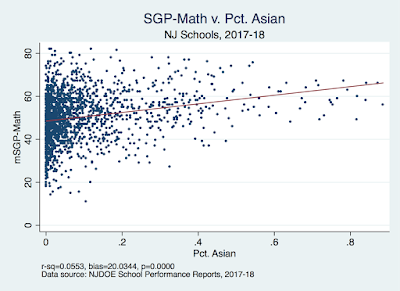

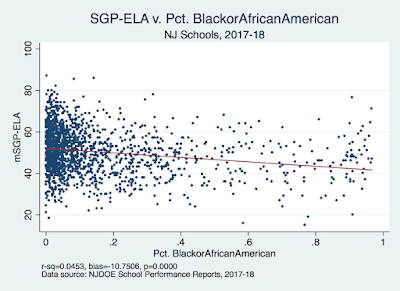

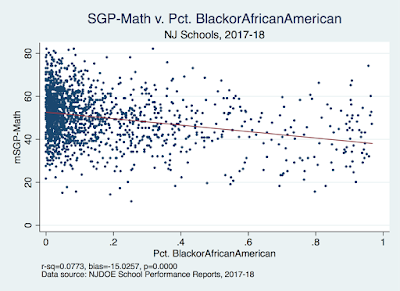

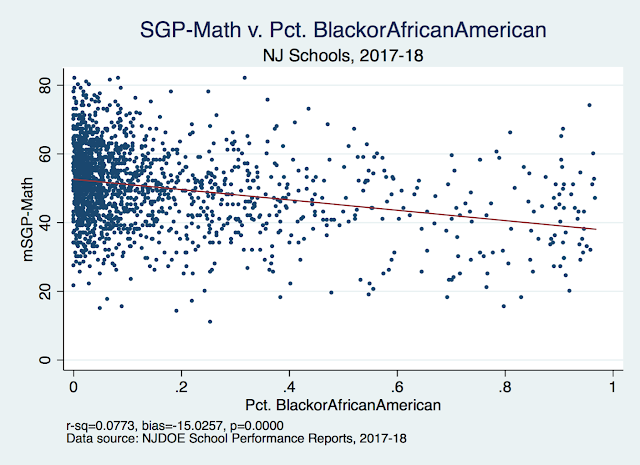

Now, we know test scores are correlated with student characteristics. Those characteristics are reported at the school level, so we have to compare them to school-level SGPs. How does that look? Let's start with race.

Schools with larger percentages of African-American students will see their SGPs fall.

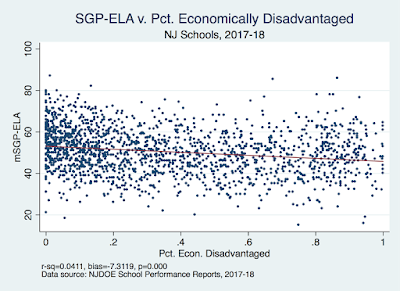

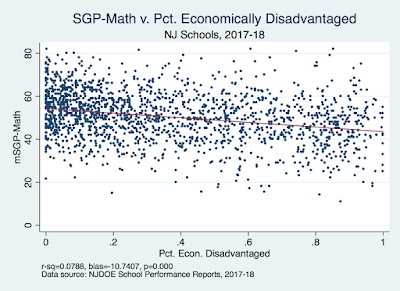

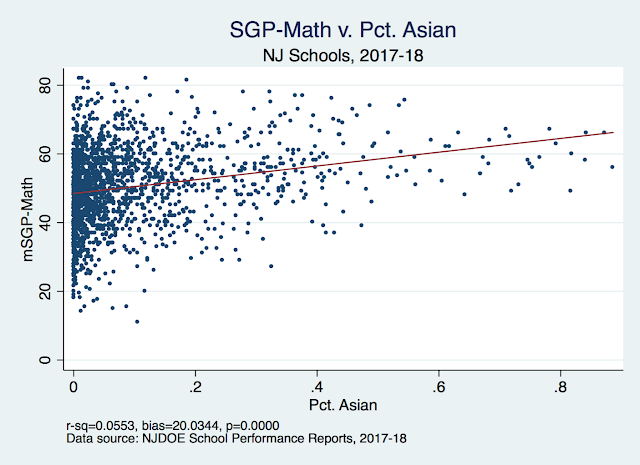

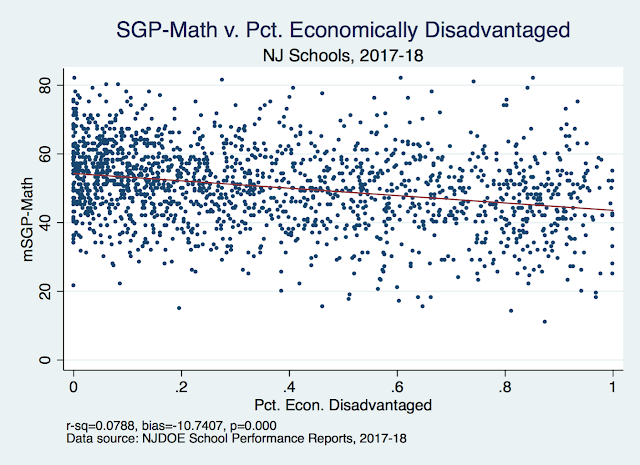

But schools with larger percentages of Asian students will see their SGPs rise.

Remember: when SGPs were being sold to us, we were told by NJDOE leadership at the time that these measures would "fully take into account socio-economic status." Is it true?

There is a clear and negative correlation between SGPs and the percentage of a school's population that is economically disadvantaged.

Let me be clear: in my own research work, I have used SGPs to assess the efficacy of certain policies. But I always acknowledge their inherent limitations, and I always, as best as I can, try to mitigate their inherent bias. I think this is a reasonable use of these measures.

What is not reasonable, in my opinion, is to continue to use them as they are reported to make judgments about school or school district quality. And it's certainly unfair to make SGPs a part of a teacher accountability system where high-stakes decisions are compelled by them.

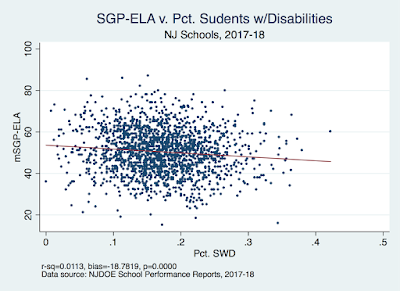

I put more graphs below; they show the bias in SGPs varies somewhat depending on grade and student characteristics. But it's striking just how pervasive this bias is. The fact that this can be shown year after year should be enough for policymakers to, at the very least, greatly limit the use of SGPs in high-stakes decisions.

I am concerned, however, that around this time next year I'll be back with a new slew of scatterplots showing exactly the same problem. Unless and until someone in authority is willing to acknowledge this issue, we will continue to be saddled with a measure of school and teacher quality that is inherently flawed.

* Sorry to whoever made it, but I can't find an attribution.

** By the way: it really doesn't matter that this year's scores are measured with error; the problem is last year's scores are.

*** The "m" in "mSGP" stands for "median." Half of the school's individual student SGPs are above this median; half are below.

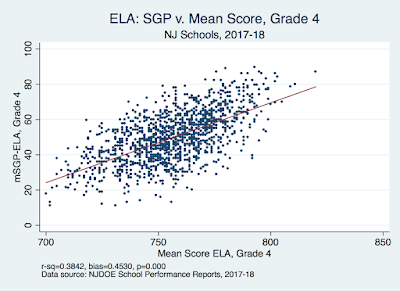

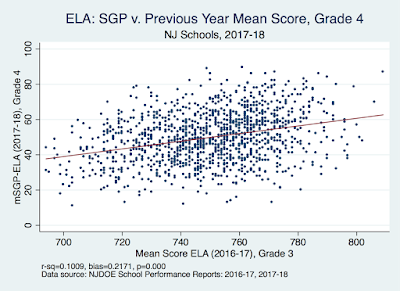

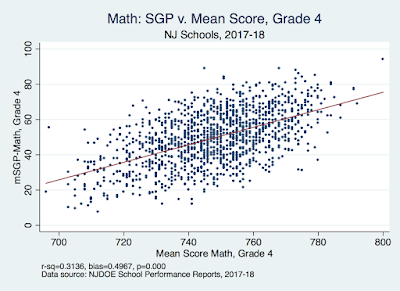

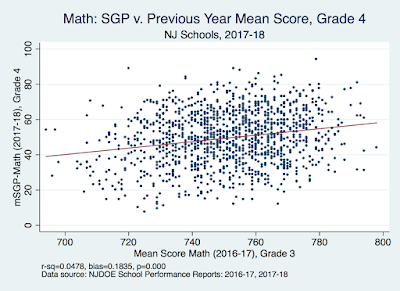

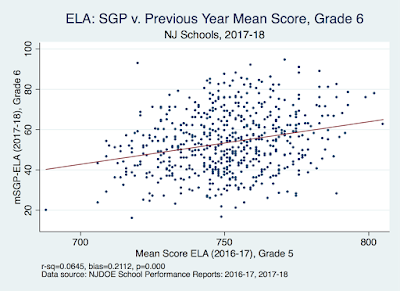

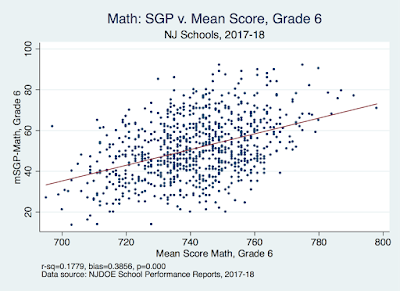

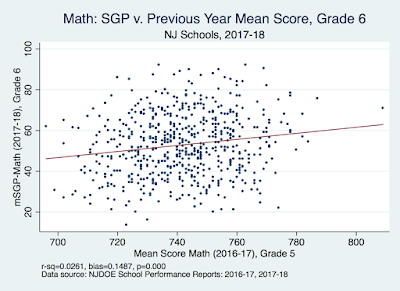

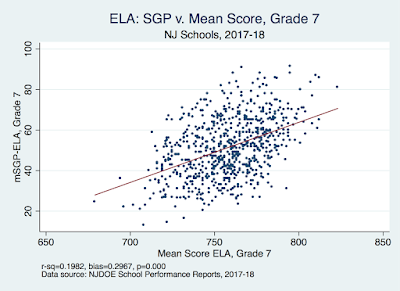

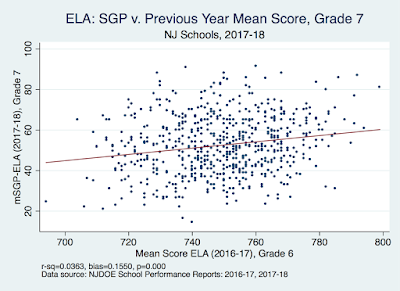

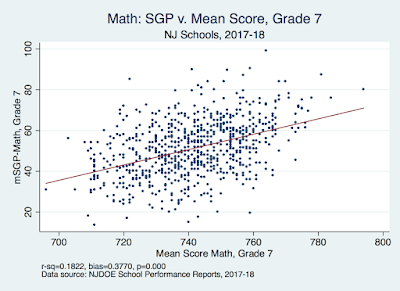

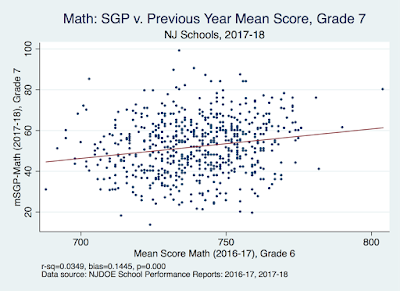

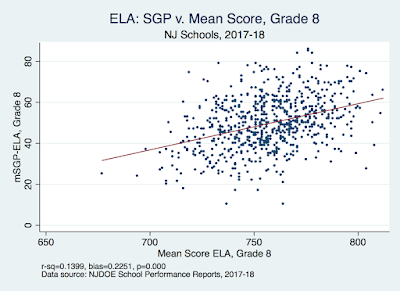

ADDITIONAL GRAPHS: Here is the full set of scatterplots showing the correlations between test scores and SGPs. SGPs begin in Grade 4, because the first year of testing is Grade 3, which becomes the baseline for "growth."

For each grade, I show the correlations between this year's test score and this year's SGP in math and English Language Arts (ELA). I also show the correlation between this year's SGP and last year's test score. In general, the correlation with last year's score is not as strong, but it is still statistically significant.

Here's Grade 4:

Grade 5:

Grade 6:

Grade 7:

I only include ELA for Grade 8, as many of those students take the Algebra I test instead of the Grade 8 Math test.

Here are correlations on race for math and ELA, starting with Hispanic students:

White students:

Asian students:

African American students:

Here are the correlations with students who qualify for free or reduced-price lunch:

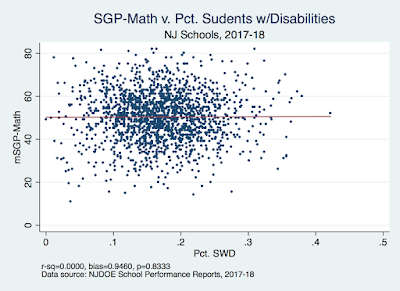

Finally, students with learning disabilities:

This last graph shows the only correlation that is not statistically significant; in other words, the percentage of students with disabilities (SWDs) in a school does not predict that school's SGP for math.
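If you want a feel for what "statistically significant" means for a correlation like these, here is one way to check it: a permutation test. To be clear, this is a swapped-in technique chosen because it needs nothing beyond the Python standard library; it is not necessarily the test behind the graphs above. The data are synthetic, and the names `pearson_r` and `permutation_p_value` are hypothetical helpers written for this sketch.

```python
import random


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def permutation_p_value(x, y, n_perm=2000, seed=0):
    """Two-sided permutation p-value for the correlation between x and y."""
    rng = random.Random(seed)
    observed = abs(pearson_r(x, y))
    shuffled = list(y)
    hits = 0
    for _ in range(n_perm):
        # Shuffling y breaks any real link between x and y.
        rng.shuffle(shuffled)
        if abs(pearson_r(x, shuffled)) >= observed:
            hits += 1
    # Add-one correction so the p-value is never exactly zero.
    return (hits + 1) / (n_perm + 1)


# Synthetic "schools" (assumption, NOT NJDOE data).
rng = random.Random(1)
pct_group = [rng.uniform(0, 30) for _ in range(200)]
related_msgp = [60 - 0.5 * p + rng.gauss(0, 5) for p in pct_group]
unrelated_msgp = [rng.gauss(50, 5) for _ in pct_group]

p_related = permutation_p_value(pct_group, related_msgp)
p_unrelated = permutation_p_value(pct_group, unrelated_msgp)
print(f"built-in relationship: p = {p_related:.4f}")
print(f"no relationship:       p = {p_unrelated:.4f}")
```

A small p-value says a correlation that strong would rarely show up if the student characteristic and the SGP were unrelated. A large one, as with the SWD graph, means the data can't distinguish the pattern from noise.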

This blog post has been shared by permission from the author.

Readers wishing to comment on the content are encouraged to do so via the link to the original post.

Find the original post here:

The views expressed by the blogger are not necessarily those of NEPC.